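While learning Spark, I found that many blog posts explain its concepts and internals inconsistently or only superficially, so I started studying them against the source code. Finding the source that matches a concept makes the concept much easier to understand. The catch is that a lot of the source is hard to follow, and running only the examples leaves many code paths unexercised. Reading the code together with its test classes makes it far easier.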

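Fork a copy of the source. Do not modify it first, because changes such as added comments easily cause errors at compile time. Open Git Bash in the project root and run the following two commands to build with Maven: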

- Set the memory available to Maven during the build:

`export MAVEN_OPTS="-Xmx2g -XX:ReservedCodeCacheSize=512m"`

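- Build: `-T 4` runs the build with four threads, and the `-P` flags enable the YARN and Hadoop 2.6 profiles so that Hadoop support is compiled in: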

`./build/mvn -T 4 -Pyarn -Phadoop-2.6 -Dhadoop.version=2.6.0 -DskipTests clean package`

- Run a test to check that the build succeeded:

`build/sbt`
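The two Maven steps above can be wrapped in a small bash function, so they always run together and from the right directory. This is a minimal sketch and not part of Spark. The name `build_spark` is hypothetical, and the function assumes you call it from the Spark source root in Git Bash, WSL, or Linux.

```shell
#!/usr/bin/env bash
# Hypothetical helper: runs the two build steps from this post in one go.
# Assumption: called from the Spark source root, where build/mvn lives.

build_spark() {
  # Fail early with a clear message if we are not in the Spark root.
  if [ ! -f ./build/mvn ]; then
    echo "error: ./build/mvn not found; cd to the Spark source root first" >&2
    return 1
  fi
  # Step 1: give Maven more heap and code cache than its defaults.
  export MAVEN_OPTS="-Xmx2g -XX:ReservedCodeCacheSize=512m"
  # Step 2: 4 build threads, YARN + Hadoop 2.6 profiles, tests skipped.
  ./build/mvn -T 4 -Pyarn -Phadoop-2.6 -Dhadoop.version=2.6.0 -DskipTests clean package
}

# Usage, from the Spark root:
#   build_spark
```

The `export` runs in the calling shell, so `MAVEN_OPTS` stays set afterwards. Any later manual `./build/mvn` run in the same window therefore gets the same memory settings.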

Remote debugging

The Spark PMC recommends running tests under a remote debugger as the more convenient approach. The steps are as follows:

Import the project into IntelliJ IDEA through Maven:

Choose File / Open, select the pom.xml in the Spark root directory, and click OK. Then set up the remote debugger:

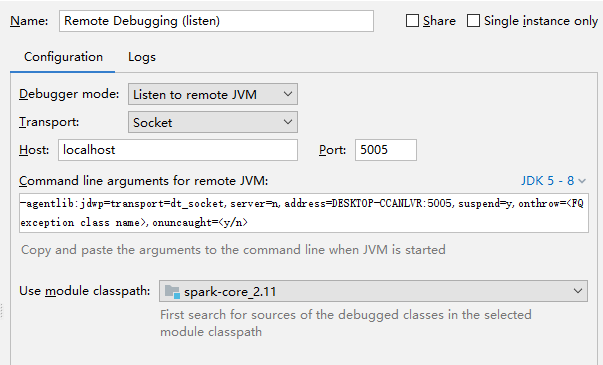

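- Choose Run > Edit Configurations…, click the plus sign in the top-left corner, and select Remote to create a remote debugging configuration. Name it "Remote Debugging (listen)".

- Set Debugger mode to Listen to remote JVM.

- Set Transport to Socket, and keep the port at 5005, which is the address used by the sbt `javaOptions` setting below.

- Click Debug to start the remote debugger listening for connections.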

The finished configuration looks like this:

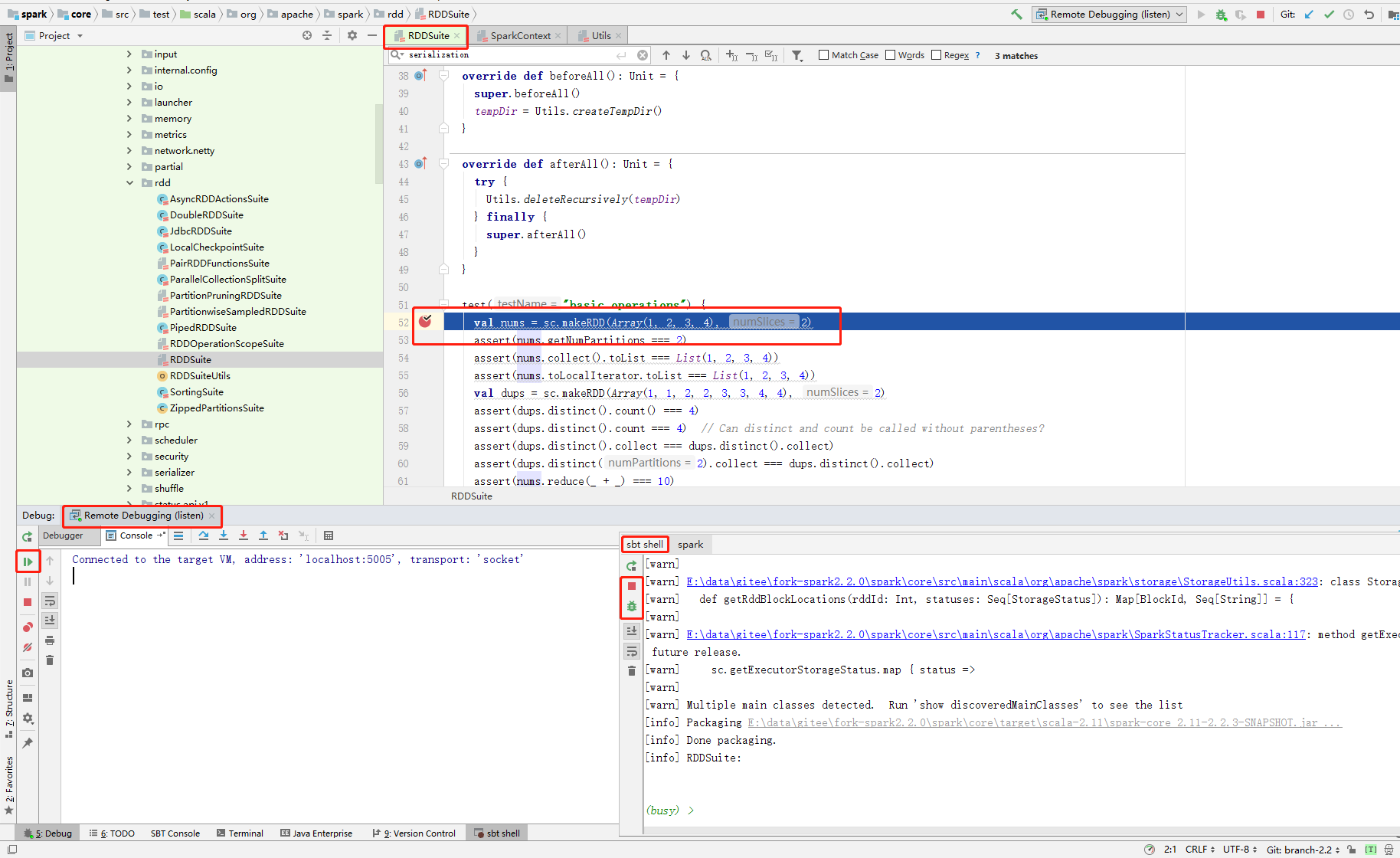

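To start remote debugging from sbt, open the sbt shell in IDEA.

sbt settings keys are scoped to the current sub-project, so first switch to the module you want to debug. For example, to switch to the core module, run the following in the sbt shell: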

`project core`

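Add the JVM option for listen-mode remote debugging. With `server=n`, the test JVM connects to the debugger that IDEA is listening with, rather than listening itself: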

`set javaOptions in Test += "-agentlib:jdwp=transport=dt_socket,server=n,suspend=n,address=localhost:5005"`

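Then run the test case (see the sbt testing tutorial for the syntax). ScalaTest's `-z` option runs only the tests whose names contain the given string: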

`testOnly *RDDSuite -- -z "basic operations"`
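The interactive sbt steps above can also run as a single non-interactive invocation. sbt executes each command-line argument as a command, in order, in the same session. The sketch below assumes three things: it runs from the Spark root, the IDEA "Remote Debugging (listen)" configuration is already listening on port 5005, and the function name `debug_spark_test` is a hypothetical name rather than anything Spark provides.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch project, add the jdwp option, run one test,
# all in a single sbt batch run.
# Assumptions: run from the Spark root; IDEA is listening on localhost:5005.

debug_spark_test() {
  local project="$1"    # sbt sub-project, e.g. core
  local suite="$2"      # suite pattern, e.g. '*RDDSuite'
  local test_name="$3"  # substring passed to ScalaTest's -z
  local jdwp='-agentlib:jdwp=transport=dt_socket,server=n,suspend=n,address=localhost:5005'
  # Each quoted argument is one sbt command; sbt runs them in order.
  ./build/sbt \
    "project ${project}" \
    "set javaOptions in Test += \"${jdwp}\"" \
    "testOnly ${suite} -- -z \"${test_name}\""
}

# Usage:
#   debug_spark_test core '*RDDSuite' 'basic operations'
```

Every call starts a fresh sbt process, so the `jdwp` option is added exactly once per run. This avoids the duplicate-agent error described under Error 4. The trade-off is sbt's startup time on every run, so the interactive sbt shell remains faster for repeated debugging.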

Once you set breakpoints in the code that the test calls, you can debug it. The result is shown in the figure:

Error 1

`[INFO] BUILD FAILURE`

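Solution: Spark must be built in a bash environment. Building directly in a Windows shell fails with an error saying bash is not supported. Either:

- build in a Git Bash window, or

- use Windows Subsystem for Linux (see this article for the detailed steps).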

Error 2

`Output path E:\data\gitee\fork-spark2.2.0\spark\external\flume-assembly\target\scala-2.11\classes is shared between: Module 'spark-streaming-flume-assembly' production, Module 'spark-streaming-flume-assembly_2.11' production`

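Solution: the project's `.idea` folder contains a `modules.xml` file that lists two different references to the same module. My guess at the cause: after the Maven build, I chose to import the project with sbt again when opening it in IDEA, which created a second copy. To fix it, I deleted all `.iml` files, rebuilt with mvn, and did not choose sbt when opening the project.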
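The cleanup step above, deleting every `.iml` file before re-importing, can be done from Git Bash with `find`. This is a minimal sketch, and `clean_iml_files` is a hypothetical name. Close the project in IDEA first so it does not regenerate module files while they are being removed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete IntelliJ IDEA module files (*.iml) below a directory.
# Assumption: the project is closed in IDEA while this runs.

clean_iml_files() {
  local root="${1:-.}"   # directory to clean; defaults to the current directory
  # -print lists each matching file, then -delete removes it.
  find "$root" -type f -name '*.iml' -print -delete
}

# Usage, from the Spark root:
#   clean_iml_files .
```

The function only removes `*.iml` files. Afterwards, rebuild with `mvn` and re-import the project from `pom.xml` without choosing sbt, as described above.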

Error 4

`ERROR: Cannot load this JVM TI agent twice, check your java command line for duplicate jdwp options.`

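Solution: this happens when `set javaOptions in Test += "-agentlib:jdwp=transport=dt_socket,server=n,suspend=n,address=localhost:5005"` is run more than once in the same sbt session. Because `+=` appends on every run, the jdwp option ends up on the command line twice. Close the sbt shell, restart it, and try again.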

References: